John Batterbee, formerly technology solutions director at Costain, joined Atkins in April to lead its new applied technology practice. He talks to Denise Chevin about his plans for the practice and the technology we’ll be seeing more of.

Tell us more about the new practice

The applied technology practice is essentially a centre of excellence for data, digital and technology skills. We’ve got just under 300 people in the team at the moment, but it’s growing very fast and we’re actively recruiting.

We work on solving interesting and complex client challenges, in aerospace, defence, security, government and critical national infrastructure.

It’s a brand-new team, with a focus on applied technology, but we have transferred into that team some of our existing experts in areas such as enterprise architecture, data science and geospatial.

What kind of services do you offer?

We provide services across the end-to-end life cycle, from the upfront strategy and architecture, through technology solution and service delivery, and ongoing data-driven performance improvement.

For example, we’re working with a client at Heathrow on a biometric security solution, enabling 40 million passengers to pass through Heathrow quickly and efficiently, with a single record of their access throughout the airport. We look at the data, and use that to identify ways to improve performance of the service, and improve the efficiency and effectiveness of the customer experience.

Another example is digital lineside inspection in the rail industry. Inspection is traditionally a very manual process, with people on foot looking for trees, undergrowth and so on encroaching onto the railway network. Using data analysis to identify vegetation encroachment, we’ve been able to demonstrate how to cut on-foot inspections by 80%, which has a significant cost benefit as well as reducing the risk of disruption.

What’s your USP?

Where the practice is uniquely able to help clients with digital transformation is at the intersection between physical engineering – understanding the real world – and digital engineering – understanding the digital world, the data, the IT systems.

I would say it’s also about understanding the full spectrum, from the operational technologies that link out to the physical assets, all the way through to information technologies that enable the enterprise business processes. There aren’t that many companies that understand the integration of operational tech and information tech to the extent we do.

Tell us more about yourself

Before joining Atkins, I was the technology solutions director at Costain, where I worked across energy, transport, and water industries. My role was about helping clients enhance the customer experience, increase productivity, and increase resilience, through use of technology. For example, I worked on leading-edge projects reducing accidents through the use of connected/automated vehicles.

Prior to that I worked as chief engineer and programme manager at a joint venture energy company – backed by major energy companies, including Shell, BP, EDF – accelerating the journey towards net zero carbon. That was focused on things like smart grid solutions, geospatial planning and data-driven services.

I moved there from QinetiQ, where I led a range of tech development projects protecting national security. I had joined QinetiQ after graduating with a Master of Engineering degree from Southampton University.

How is your group developing AI?

We’re applying machine learning into our programme management office and services, to continuously and automatically identify and analyse project data so that we can predict which activities might either overrun or underperform.

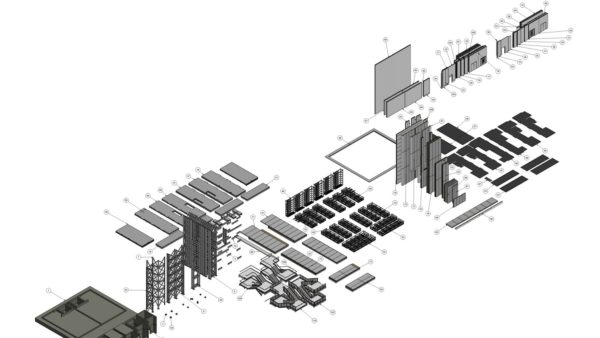

In the delivery stage, today we’re seeing more and more projects underpinned by a common data environment, and a supporting toolset to align stakeholders and streamline the design delivery processes.

I see this evolving, to enhance safety and improve efficiency, through things like augmented intelligence derived from real-time connected plant and connected worker data sources. So, you could be getting real-time data on the health and stress levels of individuals from connected worker technologies – things like the Fitbits that people already have, which generate a lot of insight on the health and wellbeing of individuals. When compiled and analysed, that data can enable us to deliver a better working environment and better safety outcomes.

In the operations and maintenance stage, there’s a lot of data in models that are generated in the design and delivery stage that often don’t get used later in the lifecycle. And in the future, I see this data in models and data from real-time sensors being combined into an enduring digital twin.

When we’re planning that, it’s quite common to see efficiency gains of 20% and more, so significant improvements in efficiency can be achieved by use of a digital twin approach. Similarly, increased asset resilience can be achieved through condition-based maintenance – looking at the active condition, how an asset is being used, and using that to drive predictive and preventative maintenance, rather than a ‘fix on fail’ approach.

But there are certainly different levels of maturity across different industries. In aerospace for example, having a really detailed model of jet engines, and real-time sensor data from those engines that’s feeding into a central platform is normal. Clearly that is not yet the case in construction.

What would you say are the barriers to the more effective use of technology for efficiency gains?

For me, there are three big headlines when it comes to barriers. The first one is commercial models and procurement approaches that don’t always incentivise or reward the supply ecosystem for using digital tools to drive enhanced efficiency. Commercial rewards for achieving the outcomes safely, at lower cost and with less environmental impact, are probably the most important thing. Because if you’re not incentivised to use digital tools to get better outcomes, then why would you?

The second is that there are loads of great tools and tech, but realising the benefits is about getting the right behaviours. That’s all about skills development and programmes and engaging the whole supply chain. Because, if you look at complex programmes, very rarely is it one or two organisations. It’s typically a large number of organisations, and they all need to be operating at a similar level of maturity.

The third barrier would be the increasing availability of data. There’s a big challenge around interoperability of that data, and the commercial incentives to share that data. We believe that we need a common set of data standards, rather than a competing set of data standards, because otherwise we can’t share data across different areas of the supply chain, with different clients, which limits our ability to apply things like AI to historical data to predict outcomes for the future. All these barriers need to be addressed.

Is the situation with common data getting better?

Yes, I think quite significantly actually, but there is still a long way to go. Part of this comes back to the first barrier, the commercial incentives or disincentives. If there is a perceived commercial sensitivity around certain data, for example, that can create a barrier to sharing that data.

But there are other models of how data can be shared, including data trust type models, where the data is put into a central data trust. It can be anonymised, aggregated and analysed, but can’t be traced by other competing organisations back to its original source.
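
As a rough illustration of the data trust idea described above, the following Python sketch pseudonymises contributing organisations and publishes only aggregated figures. It is a minimal sketch under stated assumptions, not a description of any real data trust: the record fields (`org`, `asset_type`, `overrun_pct`), the `TRUST_KEY` secret and the `min_contributors` threshold are all hypothetical names chosen for the example.

```python
import hashlib
import hmac
from collections import defaultdict
from statistics import mean

# Hypothetical secret held only by the trust operator (an assumption for this sketch).
TRUST_KEY = b"example-key-held-by-the-trust"


def pseudonymise(org_name: str) -> str:
    """Replace an organisation name with a keyed hash that only the trust can reproduce."""
    digest = hmac.new(TRUST_KEY, org_name.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:12]


def aggregate(records, min_contributors=3):
    """Return the average overrun per asset type.

    A figure is published only when at least `min_contributors` distinct
    organisations contributed, so no single source stands out in the result.
    """
    groups = defaultdict(list)
    for rec in records:
        groups[rec["asset_type"]].append((pseudonymise(rec["org"]), rec["overrun_pct"]))

    summary = {}
    for asset_type, rows in groups.items():
        contributors = {org for org, _ in rows}
        if len(contributors) >= min_contributors:
            summary[asset_type] = round(mean(value for _, value in rows), 1)
    return summary


# Illustrative, made-up records from fictional contributors.
records = [
    {"org": "Contractor A", "asset_type": "bridge", "overrun_pct": 10.0},
    {"org": "Contractor B", "asset_type": "bridge", "overrun_pct": 20.0},
    {"org": "Contractor C", "asset_type": "bridge", "overrun_pct": 30.0},
    {"org": "Contractor A", "asset_type": "tunnel", "overrun_pct": 5.0},
]

summary = aggregate(records)
print(summary)
```

In this sketch the tunnel figure is withheld because only one organisation contributed to it. A real trust would need much stronger safeguards against re-identification than a simple contributor count.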

What else are you getting excited about?

Something that’s becoming really interesting is that more and more major projects are establishing a common data environment as the foundation for the project. And that’s exciting because it’s the foundation for everything else. If we can get the data in an organised way, that’s accessible and available, curated over a longer time horizon, and available between projects, not just within an individual project, it enables us to do a whole load of new stuff, about how we analyse that data, how we can apply AI to create predictive insight and new ways of designing and operating assets better.

The second thing, and this is getting more into the ‘kit’ world, is how we’re getting new ways of gathering data about the world, to drive design decisions. Things like satellite imagery to assess biodiversity impacts on a wider scale than we’ve been able to do before, and monitor on a scale and in a way that we haven’t been able to do before.

The advance of natural language processing is also opening up a great number of opportunities. We’re able to take what might be thousands and thousands of pages of documentation that might be completely unstructured, and derive insight from that, through natural language processing. Things like requirement documents, things that you need to spend a long time ploughing into the details of.

And the other one would be wearable tech, and connected plant tech. That has started to collect real-time insights on human performance out in the field. From past experience, you can find significant ways of improving efficiency and productivity when you realise, for example, that people are waiting around because of material delivery delays that are caused by something like how lunch breaks are scheduled.

But the last one, which is increasingly important to us, is virtual and augmented reality. Because it’s all very well and good having lots of data, but if we can’t make that data accessible to the right people at the right time, it’s of no use.

A great example would be using augmented reality apps where you can take a smartphone to a particular site and point it at a particular object. By georeferencing, the app automatically pulls the right data out of the central dataset and displays it as an augmented overlay, so that a maintenance person can see the maintenance history and current health of a given asset.

And that’s so important, because it makes that data accessible and useful for the people that matter. Otherwise, it becomes data that is buried away, that you can’t unlock value from.
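
The georeferenced lookup in the augmented reality example can be sketched in a few lines of Python. This is a minimal sketch, assuming each asset record carries a latitude and longitude and that the phone reports its own position. The field names, the `nearest_asset` helper and the 25 m search radius are hypothetical, and a real app would also use the camera’s heading and far more precise positioning.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two latitude/longitude points."""
    dlat = radians(lat2 - lat1)
    dlon = radians(lon2 - lon1)
    a = sin(dlat / 2) ** 2 + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_M * asin(sqrt(a))


def nearest_asset(assets, lat, lon, max_distance_m=25.0):
    """Return the closest asset record within max_distance_m, or None if there is none."""
    best = min(
        assets,
        key=lambda a: haversine_m(lat, lon, a["lat"], a["lon"]),
        default=None,
    )
    if best is None or haversine_m(lat, lon, best["lat"], best["lon"]) > max_distance_m:
        return None
    return best


# Illustrative, made-up asset records standing in for the central dataset.
assets = [
    {"id": "pump-01", "lat": 51.5000, "lon": -0.1200, "health": "good",
     "history": ["2021-03 seal replaced"]},
    {"id": "valve-07", "lat": 51.5010, "lon": -0.1200, "health": "needs inspection",
     "history": []},
]

# Phone position roughly one metre from pump-01.
found = nearest_asset(assets, 51.50001, -0.1200)
if found is not None:
    print(found["id"], found["health"], found["history"])
```

The returned record is what the app would render as the overlay. If nothing lies within the search radius, the function returns `None` and no overlay is shown.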